Welcome back! This is the second post of a series which turned out to be more occasional than I thought it would be. You might remember that I originally called it December of Rust 2021. Look how that worked out! Not only is it not December 2021 anymore, but also it is not December 2022 anymore.

In the previous installment, I wrote about writing a virtual machine for the LC-3 architecture in Rust, inspired by Andrei Ciobanu’s blog post Writing a simple 16-bit VM in less than 125 lines of C. The result was a small Rust VM with a main loop based on the bitmatch crate.

By the end of the previous post, the VM seemed to be working well. I had tested it with two example programs from Andrei Ciobanu’s blog post, and I wanted to write more programs to see if they would work as well. Unfortunately, the two test programs were tedious to create; as you might remember, I had to write a program that listed the hex code of each instruction and wrote the machine code to a file. And that was even considering that Andrei had already done the most tedious part, of assembling the instructions by hand into hex codes! Not to mention that when I wanted to add one instruction to one of the test programs, I had to adjust the offset in a neighbouring LEA instruction, which is a nightmare for debugging. This was just not going to be good enough to write any more complicated programs, because (1) I don’t have time for that, (2) I don’t have time for that, and (3) computers are better at this sort of work anyway.

In this post, I will tell the story of how I wrote an assembler for the LC-3. The twist is, instead of making a standalone program that processes a text file of LC-3 assembly language into machine code[1], I wanted to write it as a Rust macro, so that I could write code like this, and end up with an array of u16 machine code instructions:

asm! {

.ORIG x3000

LEA R0, hello ; load address of string

PUTS ; output string to console

HALT

hello: .STRINGZ "Hello World!\n"

.END

}

(This example is taken from the LC-3 specification, and I’ll be using it throughout the post as a sample program.)

The sample already illustrates some features I wanted to have in the assembly language: instructions, of course; the assembler directives like .ORIG and .STRINGZ that don’t translate one-to-one to instructions; and most importantly, labels, so that I don’t have to compute offsets by hand.

Learning about Rust macros

This could be a foolish plan. I’ve written countless programs over the years that process text files, but I have never once written a Rust macro and have no idea how they work. But I have heard they are powerful and can be used to create domain-specific languages. That sounds like it could fit this purpose.

Armed with delusional it’s-all-gonna-work-out overconfidence, I searched the web for “rust macros tutorial” and landed on The Little Book of Rust Macros originally by Daniel Keep and updated by Lukas Wirth. After browsing this I understood a few facts about Rust macros:

They consist of rules, which match many types of single or repeated Rust tokens against patterns.[2] So, I should be able to define rules that match the tokens that form the LC-3 assembly language.

They can pick out their inputs from among any other tokens. You provide these other tokens in the input matching rule, so you could do for example:

macro_rules! longhand_add {

($a:literal plus $b:literal) => { $a + $b };

}

let two = longhand_add!{ 1 plus 1 };

This is apparently how you can create domain-specific languages with Rust macros, because the tokens you match don’t have to be legal Rust code; they just have to be legal tokens. In other words, plus is fine and doesn’t have to be the name of anything in the program; but foo[% is not.

They substitute their inputs into the Rust code that is in the body of the rule. So really, in the end, macros are a way of writing repetitive code without repeating yourself.
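To make that last point concrete, here is a minimal sketch of a macro that stamps out near-identical code. The BitWidth trait and the impl_bit_width! macro are names invented for this illustration; they are not part of the VM or the assembler.

```rust
// Hypothetical trait, invented for this sketch.
trait BitWidth {
    fn bit_width() -> u32;
}

// A single rule: the `$( ... )*` repetition in the body expands once for
// every `type => width` pair matched in the input.
macro_rules! impl_bit_width {
    ($($ty:ty => $bits:literal),* $(,)?) => {
        $(
            impl BitWidth for $ty {
                fn bit_width() -> u32 {
                    $bits
                }
            }
        )*
    };
}

// Expands to three `impl` blocks that would otherwise be written by hand.
impl_bit_width!(u8 => 8, u16 => 16, u32 => 32);
```

Calling `<u16 as BitWidth>::bit_width()` then returns the width that was substituted into the generated impl.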

A tangent about C macros

This last fact actually corresponds with one of the uses of C macros. C macros are useful for several purposes, for which the C preprocessor is a drastically overpowered and unsafe tool full of evil traps. Most of these purposes have alternative, less overpowered, techniques for achieving them in languages like Rust or even C++. First, compile-time constants:

#define PI 3.1416

for which Rust has constant expressions:

const PI: f64 = 3.1416;

Second, polymorphic “functions”:

#define MIN(a, b) ((a) <= (b) ? (a) : (b))

for which Rust has… well, actual polymorphic functions:

fn min<T: std::cmp::PartialOrd>(a: T, b: T) -> T {

if a <= b { a } else { b }

}

Third, conditional compilation:

#ifdef WIN32

int read_key(void) {

// ...

}

#endif // WIN32

for which Rust has… also conditional compilation:

#[cfg(windows)]

fn read_key() -> i32 {

// ...

}

Fourth, redefining syntax, as mentioned above:

#define plus +

int two = 1 plus 1;

which in C you should probably never do except as a joke. But in Rust (as in the longhand_add example from earlier) at least you get a clue about what is going on because of the longhand_add!{...} macro name surrounding the custom syntax; and the plus identifier doesn’t leak out into the rest of your program.

Lastly, code generation, which is what we want to do here in the assembler. In C it’s often complicated and this tangent is already long enough, but if you’re curious, here and here is an example of using a C preprocessor technique called X Macros to generate code that would otherwise be repetitive to write. In C, code generation using macros is a way of trading off less readability (because macros are complicated) for better maintainability (because in repeated blocks of very similar code it’s easy to make mistakes without noticing.) I imagine in Rust the tradeoff is much the same.
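For comparison, here is a rough Rust analogue of the X Macros idea: a single list is the source of truth, and the macro generates both an enum and the functions that depend on it. The define_traps! macro and the TrapRoutine enum are hypothetical names made up for this sketch; only the PUTS and HALT trap vectors (0x22 and 0x25) come from the LC-3.

```rust
// Hypothetical macro: generate an enum plus two lookup functions from one list.
macro_rules! define_traps {
    ($($name:ident = $vector:literal),* $(,)?) => {
        #[derive(Debug, Clone, Copy, PartialEq)]
        enum TrapRoutine {
            $($name),*
        }

        impl TrapRoutine {
            // Trap vector number, generated from the same list as the enum.
            fn vector(self) -> u8 {
                match self {
                    $(TrapRoutine::$name => $vector),*
                }
            }

            // Variant name as a string, also generated from the list.
            fn name(self) -> &'static str {
                match self {
                    $(TrapRoutine::$name => stringify!($name)),*
                }
            }
        }
    };
}

// Adding a routine means adding one line here; the enum and both match
// expressions stay in sync automatically.
define_traps! {
    Puts = 0x22,
    Halt = 0x25,
}
```

The cost is the one mentioned above: a reader has to expand the macro in their head to see what TrapRoutine actually contains.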

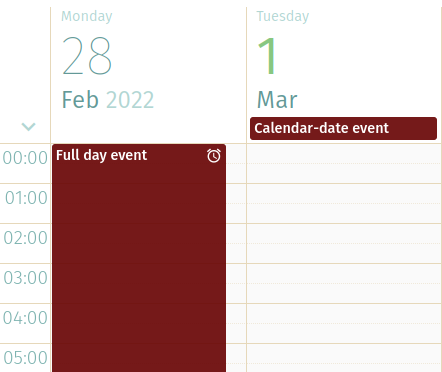

Designing an LC-3 assembler macro

You may remember in the previous post, in order to run a program with the VM, I had to write a small, separate Rust program to write the hand-assembled words to a file consisting of LC-3 bytecode. I could then load and run the file with the VM’s ld_img() method.

I would like to be able to write a file with the assembler macro, but I would also like to be able to write assembly language directly inline and execute it with the VM, without having to write it to a file. Something like this:

fn run_program() -> Result<()> {

let mut vm = VM::new();

vm.ld_asm(&asm! {

.ORIG x3000

LEA R0, hello ; load address of string

PUTS ; output string to console

HALT

hello: .STRINGZ "Hello World!\n"

.END

}?);

vm.start()

}

My first thought was that I could have the asm macro expand to an array of LC-3 bytecodes. However, writing out a possible implementation for the VM.ld_asm() method shows that the asm macro needs to give two pieces of data: the origin address as well as the bytecodes.

pub fn ld_asm(&mut self, ???) {

let mut addr = ???origin???;

for inst in ???bytecodes??? {

self.mem[addr] = Wrapping(*inst);

addr += 1;

}

}

So, it seemed better to have the asm macro expand to an expression that creates a struct with these two pieces of data in it. I started an assembler.rs submodule and called this object assembler::Program.

#[derive(Debug)]

pub struct Program {

origin: u16,

bytecode: Vec<u16>,

}

impl Program {

pub fn origin(&self) -> usize {

self.origin as usize

}

pub fn bytecode(&self) -> &[u16] {

&self.bytecode

}

}

Next, I needed to figure out how to get from LC-3 assembly language to the data model of Program. Obviously I needed the address to load the program into (origin), which is set by the .ORIG directive. But I also needed to turn the assembly language text into bytecodes somehow. Maybe the macro could do this … but at this point, my hunch from reading about Rust macros was that the macro should focus on transforming the assembly language into valid Rust code, and not so much on processing. Processing can be done in a method of Program using regular Rust code, not macro code. So the macro should just extract the information from the assembly language: a list of instructions and their operands, and a map of labels to their addresses (“symbol table”).[3]

#[derive(Clone, Copy, Debug)]

pub enum Reg { R0, R1, R2, R3, R4, R5, R6, R7 }

#[derive(Debug)]

pub enum Inst {

Add1(/* dst: */ Reg, /* src1: */ Reg, /* src2: */ Reg),

Add2(/* dst: */ Reg, /* src: */ Reg, /* imm: */ i8),

And1(/* dst: */ Reg, /* src1: */ Reg, /* src2: */ Reg),

And2(/* dst: */ Reg, /* src: */ Reg, /* imm: */ i8),

// ...etc

Trap(u8),

}

pub type SymbolTable = MultiMap<&'static str, u16>;

With all this, here’s effectively what I want the macro to extract out of the sample program I listed near the beginning of the post:

let origin: u16 = 0x3000;

let instructions = vec![

Inst::Lea(Reg::R0, "hello"),

Inst::Trap(0x22),

Inst::Trap(0x25),

Inst::Stringz("Hello World!\n"),

];

let symtab: SymbolTable = multimap!(

"hello" => 0x3003,

);

(Remember from Part 1 that PUTS and HALT are system subroutines, called with the TRAP instruction.)

As the last step of the macro, I’ll then pass these three pieces of data to a static method of Program which will create an instance of the Program struct with the origin and bytecode in it:

Program::assemble(origin, &instructions, &symtab)

You may be surprised that I picked a multimap for the symbol table instead of just a map. In fact I originally used a map. But it’s possible for the assembly language code to include the same label twice, which is an error. I found that handling duplicate labels inside the macro made it much more complicated, whereas it was easier to handle errors in the assemble() method. But for that, we have to store two copies of the label in the symbol table so that we can determine later on that it is a duplicate.
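The duplicate-detection idea can be sketched without any external crate. This is only an illustration under my own assumptions: LabelTable, insert() and resolve() are hypothetical names, and a HashMap of vectors from the standard library stands in for the multimap type used in the actual code.

```rust
use std::collections::HashMap;

// Hypothetical stand-in for the multimap: every label keeps *all* the
// addresses it was defined at, so duplicates can be reported later.
#[derive(Default)]
struct LabelTable {
    entries: HashMap<&'static str, Vec<u16>>,
}

impl LabelTable {
    // Recording a label never fails; a second definition is simply appended.
    fn insert(&mut self, label: &'static str, addr: u16) {
        self.entries.entry(label).or_default().push(addr);
    }

    // Errors are detected when the label is looked up, not when it is stored.
    fn resolve(&self, label: &str) -> Result<u16, String> {
        match self.entries.get(label).map(Vec::as_slice) {
            None => Err(format!("Undefined label \"{}\"", label)),
            Some([addr]) => Ok(*addr),
            Some(_) => Err(format!("Duplicate label \"{}\"", label)),
        }
    }
}
```

With a plain map, the second insert of the same label would silently overwrite the first, and the information that a duplicate existed would be gone by the time the lookup happens.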

Demystifying the magic

At this point I still hadn’t sat down to actually write a Rust macro. Now that I knew what I wanted the macro to achieve, I could start.[4]

The easy part was that the assembly language code should start with the .ORIG directive, to set the address at which to load the assembled bytecode; and end with the .END directive. Here’s a macro rule that does that:

(

.ORIG $orig:literal

$(/* magic happens here: recognize at least one asm instruction */)+

.END

) => {{

use $crate::assembler::{Inst::*, Program, Reg::*, SymbolTable};

let mut instructions = Vec::new();

let mut symtab: SymbolTable = Default::default();

let origin: u16 = $orig;

$(

// more magic happens here: push a value into `instructions` for

// each instruction recognized by the macro, and add a label to

// the symbol table if there is one

)*

Program::assemble(origin, &instructions, &symtab)

}};

Easy, right? The hard part is what happens in the “magic”!

You might notice that the original LC-3 assembly language’s .ORIG directive looks like .ORIG x3000, and x3000 is decidedly not a Rust numeric literal that can be assigned to a u16.

At this point I had to decide what tradeoffs I wanted to make in the macro. Did I want to support the LC-3 assembly language from the specification exactly? It looked like I might be able to do that, x3000-formatted hex literals and all, if I scrapped what I had so far and instead wrote a procedural macro[5], which operates directly on a stream of tokens from the lexer. But instead, I decided that my goal would be to support a DSL that looks approximately like the LC-3 assembly language, without making the macro too complicated.

In this case, “not making the macro too complicated” means that hex literals are Rust hex literals (0x3000 instead of x3000) and decimal literals are Rust decimal literals (15 instead of #15). That was good enough for me.

Next I had to write a matcher that would match each instruction. A line of LC-3 assembly language looks like this:[6]

instruction := [ label : ] opcode [ operand [ , operand ]* ] [ ; comment ] \n

So I first tried a matcher like this:

$($label:ident:)? $opcode:ident $($operands:expr),* $(; $comment:tt)?

There are a few problems with this. The most pressing one is that “consume tokens until newline” is just not a thing in Rust macro matchers, so it’s not possible to ignore comments like this. Newlines are just treated like any other whitespace. There’s also no fragment specifier[7] for “any token”; the closest is tt but that matches a token tree, which is not actually what I want here; I think it would mean the comment has to be valid Rust code, for one thing!

Keeping my tradeoff philosophy in mind, I gave up quickly on including semicolon-delimited comments in the macro. Regular // and /* comments would work just fine without even having to match them in the macro. Instead, I decided that each instruction would end with a semicolon, and that way I’d also avoid the problem of not being able to match newlines.

$($label:ident:)? $opcode:ident $($operands:expr),*;

The next problem is that macro matchers cannot look ahead or backtrack, so $label and $opcode are ambiguous here. If we write an identifier, it could be either a label or an opcode and we won’t know until we read the next token to see if it’s a colon or not; which is not allowed. So I made another change to the DSL, to make the colon come before the label.
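Here is a small, self-contained sketch of the colon-first matcher in isolation. The listing! macro is a hypothetical helper invented for this illustration; instead of building instructions it only records, for each line, the optional label, the opcode name, and the number of operands.

```rust
// Hypothetical demo macro: same matcher shape as the assembler, but the body
// just collects (label, opcode, operand count) tuples.
macro_rules! listing {
    ($($(: $label:ident)? $opcode:ident $($operands:expr),* ;)+) => {{
        let mut lines: Vec<(Option<&'static str>, &'static str, usize)> = Vec::new();
        $(
            // Zero or one element, depending on whether `:label` was present.
            let label: &[&'static str] = &[$(stringify!($label))?];
            // One element per comma-separated operand.
            let operands: &[&'static str] = &[$(stringify!($operands)),*];
            lines.push((label.first().copied(), stringify!($opcode), operands.len()));
        )+
        lines
    }};
}
```

Because a label line now starts with a `:` token, the macro parser can tell from the very first token whether to expect a label or an opcode, so it never has to choose between two ident fragments.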

With this matcher expression, I could write more of the body of the macro rule:[8]

(

.ORIG $orig:literal;

$($(:$label:ident)? $opcode:ident $($operands:expr),*;)+

.END;

) => {{

use $crate::assembler::{Inst::*, Program, Reg::*, SymbolTable};

let mut instructions = Vec::new();

let mut symtab: SymbolTable = Default::default();

let origin: u16 = $orig;

$(

$(symtab.insert(stringify!($label), origin + instructions.len() as u16);)*

// still some magic happening here...

)*

Program::assemble(origin, &instructions, &symtab)

}};

For each instruction matched by the macro, we insert its label (if there is one) into the symbol table to record that it should point to the current instruction. Then at the remaining “magic”, we have to insert an instruction into the instructions vector. I originally thought that I could do something like instructions.push($opcode($($operands),*));, in other words constructing a value of Inst directly. But that turned out to be impractical because the ADD and AND opcodes actually have two forms, one to do the operation with a value from a register, and one with a literal value. This means we actually need two different variants of the Inst enum for each of these instructions, as I listed above:

Add1(Reg, Reg, Reg),

Add2(Reg, Reg, i8),

I could have changed it so that we have to write ADD1 and ADD2 inside the asm! macro, but that seemed to me too much of a tradeoff in the wrong direction; it meant that if you wanted to copy an LC-3 assembly language listing into the asm! macro, you’d need to go over every ADD instruction and rename it to either ADD1 or ADD2, and same for AND. This would be a bigger cognitive burden than just mechanically getting the numeric literals in the right format.

Not requiring a 1-to-1 correspondence between assembly language opcodes and the Inst enum also meant I could easily define aliases for the trap routines. For example, HALT could translate to Inst::Trap(0x25) without having to define a separate Inst::Halt.

But then what to put in the “magic” part of the macro body? It seemed to me that another macro expansion could transform LEA R0, hello into Inst::Lea(Reg::R0, "hello")! I read about internal rules in the Little Book, and they seemed like a good fit for this.

So, I replaced the magic with this call to an internal rule @inst:

instructions.push(asm! {@inst $opcode $($operands),*});

And I wrote a series of @inst internal rules, each of which constructs a variant of the Inst enum, such as:

(@inst ADD $dst:expr, $src:expr, $imm:literal) => { Add2($dst, $src, $imm) };

(@inst ADD $dst:expr, $src1:expr, $src2:expr) => { Add1($dst, $src1, $src2) };

// ...

(@inst HALT) => { Trap(0x25) };

// ...

(@inst LEA $dst:expr, $lbl:ident) => { Lea($dst, stringify!($lbl)) };

Macro rules have to be written from most specific to least specific, so the rules for ADD first try to match against a literal in the third operand (e.g. ADD R0, R1, -1) and construct an Inst::Add2, and otherwise fall back to an Inst::Add1.
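The effect of the rule order can be tried out in a cut-down form. In this sketch the inst! macro and the two-register Reg enum are simplified stand-ins invented for the example; only the shape of the two ADD rules follows the ones above.

```rust
#[derive(Debug, PartialEq)]
enum Reg {
    R0,
    R1,
}

#[derive(Debug, PartialEq)]
enum Inst {
    Add1(Reg, Reg, Reg),
    Add2(Reg, Reg, i8),
}

// Hypothetical cut-down version of the @inst rules for ADD.
macro_rules! inst {
    // Most specific first: the third operand must be a literal such as -1.
    (ADD $dst:expr, $src:expr, $imm:literal) => {
        Inst::Add2($dst, $src, $imm)
    };
    // Fallback: any expression, which in practice means a register.
    (ADD $dst:expr, $src1:expr, $src2:expr) => {
        Inst::Add1($dst, $src1, $src2)
    };
}
```

If the two rules were swapped, the expr rule would accept -1 as well, since a literal is also an expression, and the expansion would then fail to type-check because Add1 expects a Reg in that position.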

But unfortunately I ran into another problem here. The $lbl:ident in the LEA rule is not recognized. I’m still not 100% sure why this is, but the Little Book’s section on fragment specifiers says,

Capturing with anything but the ident, lifetime and tt fragments will render the captured AST opaque, making it impossible to further match it with other fragment specifiers in future macro invocations.

So I suppose this is because we capture the operands with $($operands:expr),*. I tried capturing them as token trees (tt) but then the macro becomes ambiguous because token trees can include the commas and semicolons that I’m using for delimitation. So, I had to rewrite the rules for opcodes that take a label as an operand, like this:

(@inst LEA $dst:expr, $lbl:literal) => { Lea($dst, $lbl) };

and now we have to write them like LEA R0, "hello" (with quotes). This is the one thing I wasn’t able to puzzle out to my satisfaction, that I wish I had been.

Finally, after writing all the @inst rules I realized I had a bug. When adding the address of a label into the symbol table, I calculated the current value of the program counter (PC) with origin + instructions.len(). But some instructions will translate into more than one word of bytecode: BLKW and STRINGZ.[9] BLKW 8, for example, reserves a block of 8 words. This would give an incorrect address for a label occurring after any BLKW or STRINGZ instruction.

To fix this, I wrote a method for Inst to calculate the instruction’s word length:

impl Inst {

pub fn word_len(&self) -> u16 {

match *self {

Inst::Blkw(len) => len,

Inst::Stringz(s) => u16::try_from(s.len()).unwrap(),

_ => 1,

}

}

}

and I changed the macro to insert the label into the symbol table pointing to the correct PC:[10]

$(

symtab.insert(

stringify!($label),

origin + instructions.iter().map(|i| i.word_len()).sum::<u16>(),

);

)*

At this point I had something that looked and worked quite a lot like how I originally envisioned the inline assembler. For comparison, the original idea was to put the LC-3 assembly language directly inside the macro:

asm! {

.ORIG x3000

LEA R0, hello ; load address of string

PUTS ; output string to console

HALT

hello: .STRINGZ "Hello World!\n"

.END

}

After the tweaks I needed along the way to avoid making the macro too complicated, I now had this:

asm! {

.ORIG 0x3000;

LEA R0, "hello"; // load address of string

PUTS; // output string to console

HALT;

:hello STRINGZ "Hello World!\n";

.END;

}

Just out of curiosity, I used cargo-expand (as I did in Part One) to expand the above use of the asm! macro, and I found it was quite readable:

{

use crate::assembler::{Inst::*, Program, Reg::*, SymbolTable};

let mut instructions = Vec::new();

let mut symtab: SymbolTable = Default::default();

let origin: u16 = 0x3000;

instructions.push(Lea(R0, "hello"));

instructions.push(Trap(0x22));

instructions.push(Trap(0x25));

symtab.insert("hello", origin + instructions.iter().map(|i| i.word_len()).sum::<u16>());

instructions.push(Stringz("Hello World!\n"));

Program::assemble(origin, &instructions, &symtab)

}

Assembling the bytecode

I felt like the hard part was over and done with! Now all I needed was to write the Program::assemble() method. I knew already that the core of it would work like the inner loop of the VM in Part One of the series, only in reverse. Instead of using bitmatch to unpack the instruction words, I matched on Inst and used bitpack to pack the data into instruction words. Most of them were straightforward:

match inst {

Add1(dst, src1, src2) => {

let (d, s, a) = (*dst as u16, *src1 as u16, *src2 as u16);

words.push(bitpack!("0001_dddsss000aaa"));

}

// ...etc

The instructions that take a label operand needed a bit of extra work. I had to look up the label in the symbol table, compute an offset relative to the PC, and pack that into the instruction word. This process may produce an error: the label might not exist, or might have been given more than once, or the offset might be too large to pack into the available bits (in other words, the instruction is trying to reference a label that’s too far away.)

fn integer_fits(integer: i32, bits: usize) -> Result<u16, String> {

let shift = 32 - bits;

if integer << shift >> shift != integer {

Err(format!(

"Value x{:04x} is too large to fit in {} bits",

integer, bits

))

} else {

Ok(integer as u16)

}

}

fn calc_offset(

origin: u16,

symtab: &SymbolTable,

pc: u16,

label: &'static str,

bits: usize,

) -> Result<u16, String> {

if let Some(v) = symtab.get_vec(label) {

if v.len() != 1 {

return Err(format!("Duplicate label \"{}\"", label));

}

let addr = v[0];

let offset = addr as i32 - origin as i32 - pc as i32 - 1;

Self::integer_fits(offset, bits).map_err(|_| {

format!(

"Label \"{}\" is too far away from instruction ({} words)",

label, offset

)

})

} else {

Err(format!("Undefined label \"{}\"", label))

}

}

The arm of the core match expression for such an instruction, for example LEA, looks like this:

Lea(dst, label) => {

let d = *dst as u16;

let o = Self::calc_offset(origin, symtab, pc, label, 9)

.unwrap_or_else(append_error);

words.push(bitpack!("1110_dddooooooooo"));

}

Here, append_error is a closure that pushes the error message returned by calc_offset() into an array: |e| { errors.push((origin + pc, e)); 0 }
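To see the range check and the bit packing work together without the bitpack! macro, here is a standalone sketch using plain shifts and masks. The function names fits_signed() and encode_lea() are my own for this illustration and are not the ones in the assembler.

```rust
// Returns true if `value` survives being truncated to `bits` bits and
// sign-extended again, i.e. it fits in a signed field of that width.
// Assumes 1 <= bits <= 32.
fn fits_signed(value: i32, bits: u32) -> bool {
    let shift = 32 - bits;
    (value << shift) >> shift == value
}

// Hypothetical helper: pack an LEA instruction word by hand.
// Layout: 1110 | ddd (destination register) | ooooooooo (9-bit PC offset).
fn encode_lea(dst: u16, offset: i32) -> Result<u16, String> {
    if !fits_signed(offset, 9) {
        return Err(format!("offset {} does not fit in 9 bits", offset));
    }
    let d = dst & 0b111;
    // Keep only the low 9 bits; negative offsets stay in two's complement.
    let o = (offset as u16) & 0x1ff;
    Ok((0b1110 << 12) | (d << 9) | o)
}
```

In the sample program, LEA sits at x3000 and hello at x3003; the offset is relative to the already-incremented PC, so it is 2, and encode_lea(0, 2) yields the word 0xE002.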

Lastly, a couple of arms for the oddball instructions that define data words, not code:

Blkw(len) => words.extend(vec![0; *len as usize]),

Fill(v) => words.push(*v),

Stringz(s) => {

words.extend(s.bytes().map(|b| b as u16));

words.push(0);

}

At the end of the method, if there weren’t any errors, then we successfully assembled the program:

if words.len() > (0xffff - origin).into() {

errors.push((0xffff, "Program is too large to fit in memory".to_string()));

}

if errors.is_empty() {

Ok(Self {

origin,

bytecode: words,

})

} else {

Err(AssemblerError { errors })

}

Bells and whistles

Next thing was to add a few extensions to the assembly language to make writing programs easier. (Writing programs is what I’m going to cover in Part 3 of the series.)

While researching the LC-3 for Part 1, I found a whole lot of lab manuals and other university course material. No surprise, since the LC-3 is originally from a textbook. One document I stumbled upon was “LC3 Language Extensions” from Richard Squier’s course material at Georgetown. In it are a few handy aliases for opcodes:

MOV R3, R5 – copy R5 into R3; can be implemented as ADD R3, R5, 0, i.e. R3 = R5 + 0

ZERO R2 – clear (store zero into) R2; can be implemented as AND R2, R2, 0

INC R4 – increment (add one to) R4; can be implemented as ADD R4, R4, 1

DEC R4 – decrement (subtract one from) R4; can be implemented as ADD R4, R4, -1

Besides these, the LC-3 specification itself names RET as an alias for JMP R7. Finally, an all-zero instruction word is a no-op so I defined an alias NOP for it.[11]

These are straightforward to define in the macro:

(@inst MOV $dst:expr, $src:expr) => { Add2($dst, $src, 0) };

(@inst ZERO $dst:expr) => { And2($dst, $dst, 0) };

(@inst INC $dst:expr) => { Add2($dst, $dst, 1) };

(@inst DEC $dst:expr) => { Add2($dst, $dst, -1) };

(@inst RET) => { Jmp(R7) };

(@inst NOP) => { Fill(0) };

I wrote the last one as Fill(0) and not as Br(false, false, false, 0), which might have been more instructive, because Br takes a &'static str for its last parameter, not an address. So I would have had to make a dummy label in the symbol table pointing to address 0. Filling a zero word seemed simpler and easier.
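Why a zero word behaves as a no-op can be checked against the BR encoding: opcode 0000 in the top four bits, followed by the three condition bits n, z and p. The is_nop() function below is a hypothetical helper written only for this illustration.

```rust
// Hypothetical helper: a word is a no-op when it decodes as BR with none of
// the n/z/p condition bits set, so the branch is never taken.
fn is_nop(word: u16) -> bool {
    let opcode = word >> 12; // bits 15..12
    let nzp = (word >> 9) & 0b111; // bits 11..9
    opcode == 0 && nzp == 0
}
```

The remaining nine bits are the branch offset, which is ignored when the branch is not taken, so Fill(0) is just the simplest member of that family.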

The final improvement I wanted was to have AssemblerError print nice error messages. I kind of glossed over AssemblerError earlier, but it is an implementation of Error that contains an array of error messages with their associated PC value:

#[derive(Debug, Clone)]

pub struct AssemblerError {

errors: Vec<(u16, String)>,

}

impl error::Error for AssemblerError {}

I implemented Display such that it would display each error message alongside a nice hex representation of the PC where the instruction failed to assemble:

impl fmt::Display for AssemblerError {

fn fmt(&self, f: &mut fmt::Formatter) -> fmt::Result {

writeln!(f, "Error assembling program")?;

for (pc, message) in &self.errors {

writeln!(f, " x{:04x}: {}", pc, message)?;

}

Ok(())

}

}

This still left me with a somewhat unsatisfying mix of kinds of errors. Ideally, the macro would catch all possible errors at compile time!

At compile time we can catch several kinds of errors:

// Unsyntactical assembly language program

asm!{ !!! 23 skidoo !!! }

// error: no rules expected the token `!`

HCF; // Nonexistent instruction mnemonic

// error: no rules expected the token `HCF`

ADD R3, R2; // Wrong number of arguments

// error: unexpected end of macro invocation

// note: while trying to match `,`

// (@inst ADD $dst:expr, $src:expr, $imm:literal) => { Add2($dst, $sr...

// ^

ADD R3, "R2", 5; // Wrong type of argument

// error[E0308]: mismatched types

// ADD R3, "R2", 5;

// ^^^^ expected `Reg`, found `&str`

// ...al) => { Add2($dst, $src, $imm) };

// ---- arguments to this enum variant are incorrect

// note: tuple variant defined here

// Add2(/* dst: */ Reg, /* src: */ Reg, /* imm: */ i8),

// ^^^^

BR R7; // Wrong type of argument again

// error: no rules expected the token `R7`

// note: while trying to match meta-variable `$lbl:literal`

// (@inst BR $lbl:literal) => { Br(true, true,...

// ^^^^^^^^^^^^

These error messages from the compiler are not ideal (if I had written a dedicated assembler, I’d have made it output better error messages), but they are not terrible either.

Then there are some errors that could be caught at compile time, but not with this particular design of the macro. Note, though, that saying an error is caught at runtime is ambiguous here. Even if the Rust compiler doesn’t flag the error while processing the macro, we can still flag it when assemble() executes; this is at runtime for the Rust program, but at compile time for the assembly language. It’s different from an LC-3 runtime error where the VM encounters an illegal opcode such as 0xDEAD during execution.

Anyway, this sample program contains one of each such error and shows the nice output of AssemblerError:

asm! {

.ORIG 0x3000;

LEA R0, "hello"; // label is a duplicate

PUTS;

LEA R0, "greet"; // label doesn't exist

PUTS;

LEA R0, "salute"; // label too far away to fit in offset

ADD R3, R2, 0x7f; // immediate value is out of range

HALT;

:hello STRINGZ "Hello World!\n";

:hello STRINGZ "Good morning, planet!\n";

BLKW 1024;

:salute STRINGZ "Regards, globe!\n";

BLKW 0xffff; // extra space makes the program too big

.END;

}?

// Error: Error assembling program

// x3000: Duplicate label "hello"

// x3002: Undefined label "greet"

// x3004: Label "salute" is too far away from instruction (1061 words)

// x3005: Value x007f is too large to fit in 5 bits

// xffff: Program is too large to fit in memory

To check that all the labels are present only once, you need to do two passes on the input. In fact, the macro effectively does do two passes: one in the macro rules where it populates the symbol table, and one in assemble() where it reads the values back out again. But I don’t believe it’d be possible to do two passes in the macro rules themselves, to get compile time checking for this.

The out-of-range value in ADD R3, R2, 0x7f is an interesting case though! This could be caught at compile time if Rust had arbitrary bit-width integers.[12] After all, TRAP -1 and TRAP 0x100 are caught at compile time because the definition of Inst::Trap(u8) does not allow you to construct one with those literal values.

I tried using types from the ux crate for this, e.g. Add2(Reg, Reg, ux::i5). But there is no support for constructing custom integer types from literals, so I would have had to use try_from() in the macro — in which case I wouldn’t get compile time errors anyway, so I didn’t bother.

My colleague Andreu Botella suggested that I could make out-of-range immediate values a compile time error by using a constant expression — something I didn’t know existed in Rust.

(@int $bits:literal $num:literal) => {{

const _: () = {

let converted = $num as i8;

let shift = 8 - $bits;

if converted << shift >> shift != converted {

panic!("Value is too large to fit in bitfield");

}

};

$num as i8

}};

(@inst ADD $dst:expr, $src:expr, $imm:literal) => { Add2($dst, $src, asm!(@int 5 $imm)) };

I found this really clever! But on the other hand, it made having a good error message quite difficult. panic! in a constant expression cannot format values, it can only print a string literal. So you get a compile time error like this:

error[E0080]: evaluation of constant value failed

asm! { .ORIG 0x3000; ADD R0, R0, 127; .END; }.unwrap_err();

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^ the evaluated program panicked at 'Value is too large to fit in bitfield'

The whole assembly language program is highlighted as the source of the error, and the message doesn’t give any clue which value is problematic. This would make it almost impossible to locate the error in a large program with many immediate values. For this reason, I decided not to adopt this technique. I found the runtime error preferable because it gives you the address of the instruction, as well as the offending value. But I did learn something!

Conclusion

At this point I was quite happy with the inline assembler macro! I was able to use it to write programs for the LC-3 without having to calculate label offsets by hand, which is all I really wanted to do in the first place. Part 3 of the series will be about some programs that I wrote to test the VM (and test my understanding of it!)

I felt like I had successfully demystified Rust macros for myself now, and would be able to write another macro if I needed to. I appreciated having the chance to gain that understanding while working on a personal project that caught my interest.[13] This is, for my learning style at least, my ideal way to learn. I hope that sharing it helps you too.

Finally, you should know that this writeup presents an idealized version of the process. In 2020 I wrote a series of posts where I journalled writing short Rust programs, including mistakes and all, and those got some attention. This post is not that. I tried many different dead ends while writing this, and if I’d chronicled all of them this post would be even longer. Here, I’ve tried to convey approximately the journey of understanding I went through, while smoothing it over so that it makes — hopefully — a good read.

Many thanks to Andreu Botella, Angelos Oikonomopoulos, and Federico Mena Quintero who read a draft of this and with their comments made it a better read than it was before.

[1] As might be the smarter, and certainly the more conventional, thing to do.

[2] Tokens as in what a lexer produces: identifiers, literals, …

[3] A symbol table usually means something different, a table that contains information about a program’s variables and other identifiers. We don’t have variables in this assembly language; labels are the only symbols there are.

[4] What actually happened is that I just sat down and started writing and deleting and rewriting macros until I got a feel for what I needed. But this blog post is supposed to be a coherent story, not a stream-of-consciousness log, so let’s pretend that I thought about it like this first.

[5] Conspicuously, still under construction in the Little Book.

[6] This isn’t a real grammar for the assembly language, just an approximation.

[7] Fragment specifiers, according to the Little Book, are what the expr part of $e:expr is called.

[8] Notice that .ORIG and .END now have semicolons too, but no labels; they aren’t instructions, so they can’t have an address.

[9] Eagle-eyed readers may note that in the LC-3 manual these are called .BLKW and .STRINGZ, with leading dots. I elided the dots, again to make the macro less complicated.

[10] This now seems wasteful rather than keeping the PC in a variable. On the other hand, it seems complicated to add a bunch of local variables to the repeated part of the macro.

[11] In fact, any instruction word with a value between 0x0000 and 0x01ff is a no-op. This is a consequence of BR’s opcode being 0b0000, which I can only assume was chosen for exactly this reason. BR, with the three status register bits also being zero, never branches and always falls through to the next instruction.

[12] Or even just i5 and i6 types.

[13] Even if it took 1.5 years to complete it.